The new traveling exhibition opens to the public for the first time in Heidelberg on January 29, 2022, at MAINS.

Con Espressione!

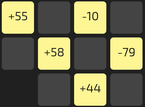

Take on the role of a conductor. Move your hand over the sensor on the desk to play with the volume and tempo of the piece you hear. Move the slider to get support from our Artificial Intelligence (AI) musician.

Music expresses emotions, feelings, and human sensations. This exhibit lets you explore the difference between a cold, inexpressive, machine-like reproduction of a piece and a more “human” interpretation produced by an AI trained on hundreds of recorded performances. The higher the value on the slider, the more freedom the machine has to deviate from the prescribed parameters. It adjusts the loudness, timing, and articulation of the notes so that they differ slightly from what you conduct, making the music more lively and less “mechanical”. But too much of this is also problematic, as you will see… This is a true cooperation: you control loudness and tempo with your hand, and the computer adds its own details and deviations.
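The page does not describe how the slider and the AI's deviations are combined. The following is a minimal sketch under stated assumptions: that the AI predicts a per-note offset in loudness, timing, and articulation, and that the slider linearly scales those offsets on top of the tempo and loudness the visitor conducts. All names (`Note`, `Deviation`, `render`) are hypothetical and do not come from the exhibit's software.

```python
from dataclasses import dataclass


@dataclass
class Note:
    onset: float     # score position in beats
    velocity: float  # MIDI-style loudness, 1-127
    duration: float  # notated duration in beats


@dataclass
class Deviation:
    # Hypothetical per-note deviations an expressive model might predict.
    velocity_offset: float  # added loudness (MIDI velocity units)
    timing_offset: float    # onset shift in seconds
    articulation: float     # ratio of played to notated duration (1.0 = as written)


def render(notes, deviations, tempo_bpm, loudness_scale, slider):
    """Return (onset_s, velocity, duration_s) tuples for each note.

    tempo_bpm and loudness_scale stand in for what the visitor conducts.
    slider is assumed to lie in [0, 1]: 0 plays the score mechanically,
    1 applies the full predicted deviations.
    """
    if len(notes) != len(deviations):
        raise ValueError("need exactly one deviation per note")
    if not 0.0 <= slider <= 1.0:
        raise ValueError("slider must be between 0 and 1")

    sec_per_beat = 60.0 / tempo_bpm
    rendered = []
    for note, dev in zip(notes, deviations):
        # Mechanical timing from the conducted tempo, plus a scaled timing deviation.
        onset = note.onset * sec_per_beat + slider * dev.timing_offset
        # Conducted loudness, plus a scaled loudness deviation, clamped to the MIDI range.
        velocity = note.velocity * loudness_scale + slider * dev.velocity_offset
        velocity = max(1.0, min(127.0, velocity))
        # Interpolate articulation between "as written" (1.0) and the predicted ratio.
        articulation = 1.0 + slider * (dev.articulation - 1.0)
        duration = note.duration * sec_per_beat * articulation
        rendered.append((onset, velocity, duration))
    return rendered
```

With `slider=0` the output is the strictly mechanical rendering at the conducted tempo and loudness. Raising the slider adds progressively more of the model's deviations, which matches the exhibit's description of giving the machine more freedom as the slider value increases.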

The GitHub repository for the user interface is here.